Role of Familiarity in Recognizing Faces and Words: an EEG Study

The ability to recognize faces and words is crucial for social communication. A close relationship between face recognition and word recognition has been documented in numerous studies using EEG, evoked potentials, and functional MRI, as well as in clinical observations of patients with impaired face perception who also showed deficits in word recognition. Therefore, identifying common mechanisms underlying the recognition of verbal and non-verbal stimuli is relevant not only for normal physiology and cognitive neuroscience, but also for clinical conditions such as agnosia of various etiologies.

We hypothesized that EEG patterns related to the recognition of words and faces might be influenced by both the stimulus-type factor (word or face) and the factor of familiarity.

The aim of the study is to identify the EEG patterns responsible for the perception and recognition of a visual stimulus, regardless of its specificity, i.e. the common patterns for verbal (words) and non-verbal (faces) stimuli.

Materials and Methods. The EEG data were obtained from 26 volunteers who were presented with complex visual stimuli, i.e., photos of people with words superimposed on them, in which familiar (known) and unfamiliar people and words were combined in equal proportions. In one task, the subjects were asked to classify the faces as familiar or unfamiliar (attending only to the faces); in the other, they classified the words in the same way (attending only to the words).

Results. We found a pronounced effect of familiarity on the EEG patterns: the amplitude of the N250 component of evoked potentials recorded in the frontal areas was significantly greater in responses to unfamiliar stimuli (both faces and words) than to familiar ones. We also found an effect of instruction: the N400 amplitude was greater in responses that followed the “attention to words” instruction than in those that followed the “attention to faces” instruction; this effect was also most pronounced at the frontal sites.

Conclusion. At the early stages of visual stimulus recognition, evoked potential responses are modulated by the familiarity of the stimuli (that is, by their representation in long-term memory) and not by their type (face or word). The categorization of stimuli by their modality (verbal or non-verbal) apparently occurs at later stages of processing.

Introduction

The ability to recognize faces and words is crucial for social communication. According to the prevailing view in the literature, structures of the left hemisphere are mainly responsible for the recognition of written words, whereas the right hemisphere controls the recognition of faces [1–4 and others]. Numerous psychophysical and neurophysiological studies indicate that this localization is due to qualitative differences between these stimuli. It was found that the left hemisphere is primarily responsible for recognizing abstract categories with associative links between separate elements, whereas the right hemisphere recognizes specific subject categories based on spatial relationships between the elements [5]. These data seemingly allow one to associate face recognition with the fusiform face area in the right hemisphere [6] and word processing with the corresponding area of the fusiform gyrus in the left hemisphere (the visual word form area) [3], and thus suggest the existence of two independent high-level mechanisms of visual processing.

However, a growing number of studies call such a strict separation into question. For example, despite the established lateralization of these two functions, the presentation of a stimulus (either a word or a face) usually activates both hemispheres, and the fusiform face area and the visual word form area overlap in both hemispheres [7, 8]. Therefore, the same processes may be involved in recognizing faces and words [9], and clinical data support this possibility. For example, back in the 1990s, cases of complex visual agnosia were described in which impaired perception of faces was combined with impaired recognition of objects and words [10, 11]. Another report [12] presented a patient with facial and verbal agnosia that had developed after a head injury in childhood; notably, object recognition was not impaired in this patient. To date, quite a few cases of impaired face perception resulting from brain injury (acquired prosopagnosia) and accompanied by impaired word perception have been reported (see, for example, review [13]). In addition, there is evidence that patients with alexia show a partial impairment of face recognition [14].

The method of evoked potentials (EP) is widely used to study face detection and recognition. The EPs generated in the brain by face stimuli are used by researchers in various experimental paradigms. These signals reflect several processing steps. The earliest EP component traditionally associated with perceptual processing of facial images is the N170. This is a negative wave with a latency of 130–200 ms that reaches its maximum in response to facial images and can be detected at the occipital-parietal sites [15]. Some authors believe that at the N170 stage, the brain detects the face in the image and extracts it from the background; these processes are attributed to primary processing, which does not involve recognition [16, 17]. However, other studies showed that the N170 amplitude was modulated not only by the type of stimulus but also by its representation in long-term memory, i.e., familiarity: specifically, the N170 amplitude was greater when faces of well-known people (rather than strangers) were presented [18–20]. Therefore, it is quite possible that the recognition process is already under way within the first 100–200 ms of face presentation.

A number of studies have found that visually presented words elicit a more pronounced negative wave than other stimuli [21–24]; this wave has a latency of about 170 ms (it is also called the N1 component, or the recognition potential). Another report [25] found that the N170 responses to faces and words share similar features.

The next time window in which responses modulated by the familiarity of faces can be seen is 200–300 ms after stimulus onset. Within this time range, a component called N250 is recorded; the N250 appears in response to repeated stimuli and is therefore also called N250r (r for repetition). In the context of familiarity, the N250 amplitude was greater in responses to familiar faces than to unfamiliar ones at the occipital-temporal sites (P8 [26], P10 and TP10 [27]), as well as at the parietal, central, and frontal electrode sites [28, 29]. The N250 component is thought to reflect the representation of a facial pattern for further recognition and can be considered a kind of familiarity index [30].

Further processing of information about visual stimuli and its matching against traces stored in long-term memory is believed to occur about 350–450 ms after stimulus onset. This process is associated with a component recorded in the frontal areas; this component peaks at about 400 ms, and its amplitude depends on whether or not the stimulus is familiar to the subject. This observation has been confirmed in a number of studies in which both faces and words were presented [16, 31–34]. In the literature, this component is called the “midfrontal old-new effect” [35] or, more often, N400f (f for familiarity), which distinguishes it from the “classic” N400. The latter is associated rather with semantic processing and the context of a stimulus, although studies show a close relationship between these two components [36]. The N400f component is believed to be an electrophysiological marker of information processing in semantic memory: when a familiar stimulus is recognized, the semantic information stored in long-term memory is activated. A number of reports indicate that the N400f amplitude and localization may depend on the type of stimulus; still, the component is not modality-specific, that is, it appears with both types of stimuli [33, 37].

Notably, in studies where both words and faces were presented, no direct comparison between the responses to these two stimulus types was made; probably, the experimenters had different aims, or such a comparison was technically impossible because of the different physical characteristics of verbal and non-verbal stimuli. For example, a recent study [38] showed that during the recall of familiar faces and words, the measured EPs were in many ways similar for the two types of stimuli. The difference between the EEG patterns was due to the presence or absence of the stimulus in long-term semantic memory (familiar/unfamiliar) rather than to the specificity of the stimulus (a face or a word). However, the conclusions were drawn indirectly, and the interpretation of the results was somewhat speculative: the EP responses to the recollection of familiar faces had more in common with EPs elicited by recalling words than with EPs elicited by recalling unfamiliar faces.

The aim of the study is to identify EEG patterns responsible for the perception and recognition of a visual stimulus, regardless of its specificity, i.e. the common patterns for verbal (words) and non-verbal (faces) stimuli.

Materials and Methods

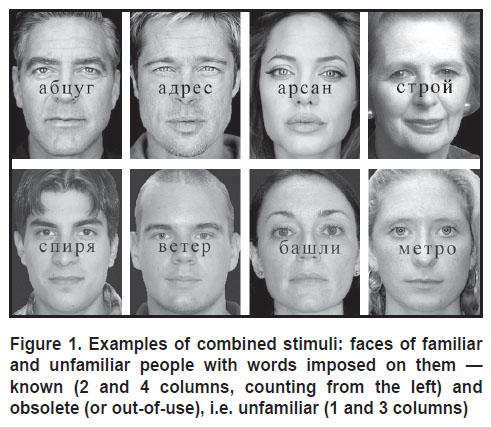

Toward this goal, we developed a combination of verbal and non-verbal stimuli that enabled us to present faces and words simultaneously and to search for a recognition pattern common to these two types of visual stimuli. We used photos of familiar and unfamiliar faces with words (familiar and unfamiliar) superimposed on them. The “unfamiliar faces” of men and women (50/50) were taken from free databases: the FEI Face Database (http://fei.edu.br/~cet/facedatabase.html) and the CVL Face Database (http://www.lrv.fri.uni-lj.si/facedb.html). Celebrity images (actors, politicians, athletes, musicians) with neutral facial expressions were taken from publicly accessible Internet resources and used as the “familiar faces”. A pre-selected set of 120 black-and-white celebrity photos was presented to 40 volunteers who did not participate in the main part of the experiment. Their task was to identify the person depicted in each photo. As a result, the 80 most recognizable photos were selected (40 men and 40 women).

All verbal stimuli were five-letter words in Russian. Words with a frequency higher than 19 instances per million words of the linguistic corpus (ipm) and an average frequency of 109 ipm (according to the frequency dictionary [39]) were used as “familiar”. The “unfamiliar” words were selected from the Internet resource http://www.zabytye-slova.ru/. A list of 160 words was submitted to six experts in the Russian language with academic degrees in philology, whose task was to remove familiar words from the list. One hundred words remained after this step; from those, words consonant with international brand names (for example, “cayenne”) were deleted. The final list of stimuli included 80 unfamiliar words.

Based on the selected photos and words, 160 combined images were compiled. In those, the words were superimposed on the faces; the familiar and unfamiliar stimuli (both words and faces) were represented evenly (Figure 1).
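The even representation of familiar and unfamiliar items can be illustrated with a minimal Python sketch. The text does not describe the exact pairing rule, so the rule below (each face category is split in half between familiar and unfamiliar words) is an assumption, as are the pool sizes of 80 items each, the function name `build_combined_stimuli`, and the placeholder item names.

```python
import random
from collections import Counter


def build_combined_stimuli(fam_faces, unf_faces, fam_words, unf_words, seed=0):
    """Pair every face with one word so that the four face/word cells are equal.

    Assumed rule (not stated in the paper): half of each face category
    receives familiar words and the other half receives unfamiliar words.
    """
    rng = random.Random(seed)
    faces = {"familiar": list(fam_faces), "unfamiliar": list(unf_faces)}
    words = {"familiar": list(fam_words), "unfamiliar": list(unf_words)}
    for pool in list(faces.values()) + list(words.values()):
        rng.shuffle(pool)

    stimuli = []
    for face_cat, face_pool in faces.items():
        half = len(face_pool) // 2
        halves = {"familiar": face_pool[:half], "unfamiliar": face_pool[half:]}
        for word_cat, face_chunk in halves.items():
            for face in face_chunk:
                stimuli.append({
                    "face": face,
                    "face_cat": face_cat,
                    "word": words[word_cat].pop(),  # each word is used once
                    "word_cat": word_cat,
                })
    rng.shuffle(stimuli)  # randomize presentation order
    return stimuli


# Hypothetical item names; 80 items per pool is an assumption.
stimuli = build_combined_stimuli(
    [f"celebrity_{i}.png" for i in range(80)],
    [f"stranger_{i}.png" for i in range(80)],
    [f"common_word_{i}" for i in range(80)],
    [f"rare_word_{i}" for i in range(80)],
)
cell_counts = Counter((s["face_cat"], s["word_cat"]) for s in stimuli)
```

With these assumed pool sizes, `cell_counts` holds four face/word cells of equal size, which is one way to keep familiarity of the face and familiarity of the word independent of each other.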

The study involved 26 volunteers (12 men and 14 women) aged 19–35 years with normal or corrected-to-normal vision. The participants were familiarized in advance with the experimental setup and informed about the possibility of terminating the experiment at any time, as well as about the confidentiality of the data obtained. All volunteers gave written informed consent to participate in the experiment. The study was conducted in accordance with the Helsinki Declaration (2013) and approved by the Ethics Committee of Saint Petersburg State University.

During the study, participants were presented with 160 black-and-white images, one at a time, on a computer screen located 70 cm from the subject. All images were equalized in brightness and size (305×408 pixels) and presented against a gray background. Before each trial, a fixation spot appeared on the screen for 800–2300 ms (the duration varied randomly); then a stimulus was presented for 200 ms, followed by a white-noise mask for 2000 ms. Thus, one trial lasted from 3000 to 4500 ms.
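The trial timing above can be checked with a short Python sketch. The three durations come from the text; drawing the fixation interval from a uniform distribution over whole milliseconds is an assumption (the text only says it varied randomly), and the names `trial_schedule`, `shortest`, and `longest` are illustrative.

```python
import random

FIXATION_RANGE_MS = (800, 2300)  # fixation spot, variable duration
STIMULUS_MS = 200                # combined face/word image
MASK_MS = 2000                   # white-noise mask


def trial_schedule(rng):
    """Return the durations of one trial in milliseconds."""
    # Assumption: uniform draw, both bounds included.
    fixation_ms = rng.randint(*FIXATION_RANGE_MS)
    return {
        "fixation_ms": fixation_ms,
        "stimulus_ms": STIMULUS_MS,
        "mask_ms": MASK_MS,
        "total_ms": fixation_ms + STIMULUS_MS + MASK_MS,
    }


# Bounds of the trial length implied by the three phases.
shortest = FIXATION_RANGE_MS[0] + STIMULUS_MS + MASK_MS
longest = FIXATION_RANGE_MS[1] + STIMULUS_MS + MASK_MS

rng = random.Random(42)
trials = [trial_schedule(rng) for _ in range(160)]
```

Here `shortest` and `longest` reproduce the 3000 to 4500 ms range given in the text: 800 + 200 + 2000 and 2300 + 200 + 2000.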

The experiment included two sessions of 80 stimuli each. In one session, subjects had to report whether the presented word was familiar to them or not, without paying attention to the presented face. In the other session, they were asked whether the presented face was familiar or not, ignoring the presented word. The subjects used a joystick to give their answer. No stimuli were repeated across the two sessions. The task order was counterbalanced across participants: half of them performed the word task first and then switched to the faces; the other half started with the face task and then switched to the words. The participants were instructed to keep looking at the cross in the center of the screen and to refrain from blinking while viewing the stimulus, until the answer button had been pressed.
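A minimal sketch of such a counterbalanced task order is given below. The text states only that half of the participants started with each task; assigning the two orders to alternating participants is an assumption, and `assign_task_order` is a hypothetical helper name.

```python
def assign_task_order(participant_ids):
    """Alternate the two task orders so that each is used equally often."""
    orders = (("words", "faces"), ("faces", "words"))
    return {pid: orders[index % 2] for index, pid in enumerate(participant_ids)}


# 26 participants, numbered 1..26 for illustration.
task_orders = assign_task_order(range(1, 27))
words_first = sum(1 for order in task_orders.values() if order[0] == "words")
```

With 26 participants this gives 13 who start with the word task and 13 who start with the face task.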

To record the EEG and the brain EPs, the Neurovizor BMM-52 multi-channel electroencephalograph (MKS, Russia) was used. The recording was performed in the monopolar mode from 38 sites with 2 ear references (A1, A2); electrode impedance did not exceed 40 kΩ. The signal sampling rate was 1000 Hz. Two electrodes for monitoring eye movements were located near the right eye. Oculomotor artifacts were corrected automatically by the Neocortex software. In addition to the electrical activity, the accuracy of responses was recorded.

Results

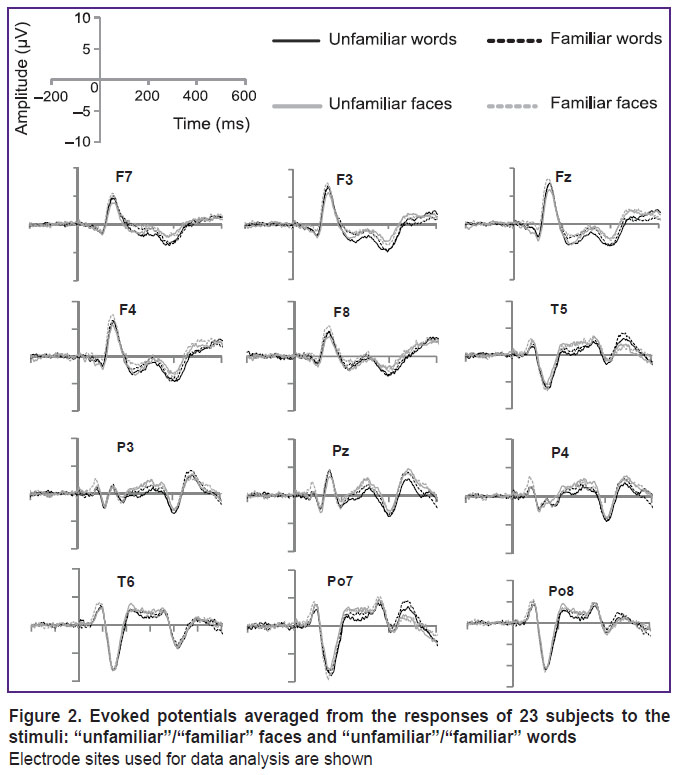

After the initial analysis of the EEG data and the participants’ responses, the data of three subjects were excluded from the final analysis because of a large number of artifacts or, in one case, because of the predominance of “unfamiliar” responses to images of well-known persons. The EP analysis included only trials in which the subject’s answer matched the designated category of the stimulus (“familiar”/“unfamiliar”); all trials with missing or incorrect answers were excluded from further analysis. The EPs were averaged for each site and each experimental condition. Based on the literature, three time windows were selected for analyzing the EP amplitude: 100–200, 200–300, and 350–450 ms. These ranges include the N170, N250 (or N250r), and N400 (N400f) components. In each time window, the mean EP amplitude was calculated. Two-factor repeated-measures analysis of variance (RM ANOVA) was used for statistical processing, with familiarity (familiar/unfamiliar) and type of stimulus (face/word) as the two factors. Figure 2 shows the averaged EP responses for the four stimulus categories.

Figure 2. Evoked potentials averaged from the responses of 23 subjects to the stimuli: “unfamiliar”/“familiar” faces and “unfamiliar”/“familiar” words. Electrode sites used for data analysis are shown.
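The windowed mean amplitude used in the analysis can be sketched in Python as follows. The three windows and the 1000 Hz sampling rate are taken from the text; the epoch start time, the half-open treatment of window boundaries, and the function name `mean_amplitude` are assumptions made for illustration, and the waveform is synthetic.

```python
WINDOWS_MS = {"N170": (100, 200), "N250": (200, 300), "N400": (350, 450)}


def mean_amplitude(erp_uv, window_ms, sfreq_hz=1000.0, epoch_start_ms=0.0):
    """Mean of an averaged EP (one site, one condition) inside a latency window.

    erp_uv         -- sequence of amplitudes in microvolts
    window_ms      -- (start, stop) latency relative to stimulus onset
    epoch_start_ms -- latency of the first sample (assumed, not from the paper)
    The window is treated as half-open: start included, stop excluded.
    """
    start_ms, stop_ms = window_ms
    first = round((start_ms - epoch_start_ms) * sfreq_hz / 1000.0)
    last = round((stop_ms - epoch_start_ms) * sfreq_hz / 1000.0)
    if first < 0 or last > len(erp_uv) or first >= last:
        raise ValueError("window lies outside the epoch")
    segment = erp_uv[first:last]
    return sum(segment) / len(segment)


# Synthetic waveform: 500 samples at 1000 Hz, a -2 uV plateau at 100-199 ms.
synthetic_erp = [0.0] * 100 + [-2.0] * 100 + [0.0] * 300
amplitudes = {
    name: mean_amplitude(synthetic_erp, window)
    for name, window in WINDOWS_MS.items()
}
```

Each entry of `amplitudes` is a single number per component, which is the kind of value that would then enter the analysis of variance for one subject, site, and condition.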

Under all experimental conditions, a pronounced N170 wave (time window 100–200 ms) was detected at the parietal-occipital sites (PO7, PO8), and the VPP component (vertex positive potential) at the frontal sites (Fz, F3, F4, F7, F8). At the parietal-occipital sites, there were no significant differences between the familiar and unfamiliar stimuli (F(1, 22)=1.076; p=0.311); the differences between words and faces were at the borderline of statistical significance (F(1, 22)=3.617; p=0.070). At the same time, the interaction between familiarity and the type of stimulus was significant (F(1, 22)=4.518; p=0.045). Pairwise comparisons showed a significantly greater N170 amplitude for familiar faces than for unfamiliar ones (F(1, 22)=4.393; p=0.048). For words, no significant differences between familiar and unfamiliar ones were found (F(1, 22)=0.766; p=0.391). At the frontal sites, the familiarity factor was significant (F(1, 22)=9.297; p=0.006), but the type of stimulus was not (F(1, 22)=0.375; p=0.547). A significant interaction between familiarity and the type of stimulus was also found (F(1, 22)=6.010; p=0.023). Pairwise comparisons revealed a significant difference in the VPP amplitude between familiar and unfamiliar faces (F(1, 22)=14.713; p=0.001); the VPP amplitude was greater for familiar faces. The difference in the VPP amplitude between familiar and unfamiliar words was not significant (F(1, 22)=0.114; p=0.738).

In the 200–300 ms window (N250), the analysis involved the frontal (Fz, F3, F4, F7, F8) and the parietal and temporal (Pz, P3, P4, T5, T6) sites. At the frontal sites, a significant effect of familiarity was detected (F(1, 22)=29.910; p<0.001), with a greater N250 amplitude for the unfamiliar stimuli; no effect of the stimulus type was found (F(1, 22)=2.664; p=0.116). In this time window, no interaction between the factors was detected (F(1, 22)=0.486; p=0.493). At the parietal sites, no effect of familiarity was found (F(1, 22)=0.940; p=0.343), but an effect of the stimulus type was (F(1, 22)=9.360; p=0.006): the responses to unfamiliar words had greater negative amplitudes than the responses to faces. No interaction between the two factors was identified (F(1, 22)=1.881; p=0.184).

In the 350–450 ms window (N400), we analyzed the data obtained from the frontal and parietal sites. At the frontal sites, no significant familiarity effect was detected (F(1, 22)=0.016; p=0.900), but the effect of the stimulus type was significant (F(1, 22)=13.438; p=0.001): the N400 amplitude was greater under the “attention to words” condition than under the “attention to faces” condition. No interaction between the factors was found (F(1, 22)=1.910; p=0.181). At the parietal sites, no significant differences were found (F<1).
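The degrees of freedom reported in this section follow from the design: with two within-subject factors of two levels each, every effect has one numerator degree of freedom, and with 23 subjects the error term has 22. In this special case each F value equals the squared one-sample t statistic of the corresponding per-subject contrast. The Python sketch below illustrates this equivalence on synthetic amplitudes; it is not the statistical software used in the study, and the names `contrast_f` and `rm_anova_2x2` are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def contrast_f(values):
    """F(1, n-1) of a one-degree-of-freedom within-subject contrast.

    Equals the squared one-sample t statistic of the per-subject contrast
    values. The values must not all be identical (zero variance).
    """
    n = len(values)
    t = mean(values) / (stdev(values) / sqrt(n))
    return t * t, (1, n - 1)


def rm_anova_2x2(subjects):
    """subjects: one dict per subject, keyed by (familiarity, stimulus_type)."""
    familiarity, stimulus_type, interaction = [], [], []
    for cell in subjects:
        ff, fw = cell[("familiar", "face")], cell[("familiar", "word")]
        uf, uw = cell[("unfamiliar", "face")], cell[("unfamiliar", "word")]
        familiarity.append((ff + fw) / 2 - (uf + uw) / 2)
        stimulus_type.append((ff + uf) / 2 - (fw + uw) / 2)
        interaction.append((ff - uf) - (fw - uw))
    return {
        "familiarity": contrast_f(familiarity),
        "stimulus_type": contrast_f(stimulus_type),
        "interaction": contrast_f(interaction),
    }


# Synthetic mean amplitudes (microvolts) for 23 subjects; not study data.
synthetic_subjects = [
    {
        ("familiar", "face"): 2.0 + 0.01 * (i % 3),
        ("familiar", "word"): 2.0,
        ("unfamiliar", "face"): 1.0,
        ("unfamiliar", "word"): 1.0,
    }
    for i in range(23)
]
result = rm_anova_2x2(synthetic_subjects)
```

Each entry of `result` is a pair of the F value and its degrees of freedom, here (1, 22) for all three effects. Designs with more than two levels per factor need the full sums-of-squares computation and a sphericity check, which this sketch does not cover.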

Discussion

In this study, we identified three stages in the recognition of visual stimuli. At the first stage, the EEG correlates are the N170 component and the vertex positive potential, which has the same latency. At this stage, primary perceptual processing and structural decoding of visual stimuli are thought to take place, without semantic processing. The difference found in our study for faces, namely the larger N170 amplitude for familiar faces, can be explained as follows: prior to semantic processing, the image of a word presented together with a face is perceived as non-specific; therefore, all words, both familiar and unfamiliar, are processed in the same way at this stage, whereas a familiar face can already modulate the early EP components [18–20]. In our experiment, when the subject’s attention was focused on words, the number of familiar and unfamiliar faces was identical in each group (familiar/unfamiliar words), so there were no differences between the responses. When attention was directed to faces, all familiar faces fell into one group and all unfamiliar ones into the other. As a result, we observed a difference in the amplitude of the N170 component.

At the next stage, corresponding to 200–300 ms from the start of the stimulation (N250), we found a significant difference in the amplitude of responses to familiar faces and words compared to unfamiliar faces and words in the frontal sites. Thus, the familiar stimuli, regardless of their type, caused more positive EP waves as compared to the responses to unfamiliar stimuli, which all tended to have negative amplitudes.

Usually, studies on familiarity use the paradigm of multiple stimulus repetition (“repetition priming design”), in which the N250 amplitude at the parietal (and sometimes central and frontal) sites is modulated by the familiarity/novelty factor. In most reports, familiar faces presented repeatedly elicit a more negative response than unfamiliar faces, although some results, like ours, point to the opposite effect. For example, study [30] showed that familiar (repeatedly presented) faces elicited a more positive wave than unfamiliar ones in the range of 250–450 ms at the frontal sites. In [40], the novelty/familiarity effect was found at the frontal sites when words were used as stimuli. The authors note that the increase in amplitude in the frontal areas is consistent with data from intracranial recordings of anterior cortical activity, which indicate a contribution of the prefrontal cortex to the formation of EEG patterns during memory-related tasks [41]. Their data are also consistent with the results of functional MRI and PET (positron emission tomography) studies, in which activation of the prefrontal cortex was found during face recognition [42, 43].

In the time window of 350–450 ms (N400), we obtained significant differences at the frontal sites between the responses to stimuli under the “attention to faces” and the “attention to words” instructions. This may indicate that, after the representations of faces and words stored in memory have been activated at the previous stage, semantic analysis of the visual information takes place. In our experimental setting, this is reflected in the greater response to words than to faces, which is due to the greater cognitive activity associated with the semantic analysis of words as compared with faces. In addition, it is known [44] that areas of the prefrontal cortex are involved in selective attention. In this regard, the differences between the responses to faces and words that we observed in the frontal areas could reflect the effect of the instruction given to the subjects to attend to the words while ignoring the faces, or vice versa.

Conclusion

Using the method of evoked potentials, we identified EEG correlates of the successive stages of recognition of verbal (words) and non-verbal (faces) stimuli in an experimental paradigm that allowed for direct comparison between the responses to these two types of stimuli and eliminated the need for multiple stimulus repetition. This approach enabled us to evaluate the role of familiarity (i.e., the representation of a stimulus in long-term memory) in stimulus recognition. It can be suggested that at the stage of comparing visual stimuli with their representations stored in memory, the stimulus is assigned to one of two categories, “familiar” or “unfamiliar”, regardless of its type. At a later stage, full semantic processing develops; this is reflected in the appearance of the N400, which is common to both words and faces but has a greater amplitude for words.

Financial support. The study was supported by a grant from the Russian Science Foundation, code IAS NID 37.53.1181.2014.

Conflict of interest. There is no conflict of interest to be reported.

References

1. Puce A., Allison T., Asgari M., Gore J.C., McCarthy G. Differential sensitivity of human visual cortex to faces, letter strings, and textures: a functional magnetic resonance imaging study. J Neurosci 1996; 16(16): 5205–5215, https://doi.org/10.1523/jneurosci.16-16-05205.1996.

2. McCarthy G., Puce A., Gore J.C., Allison T. Face-specific processing in the human fusiform gyrus. J Cogn Neurosci 1997; 9(5): 605–610, https://doi.org/10.1162/jocn.1997.9.5.605.

3. Cohen L., Dehaene S., Naccache L., Lehéricy S., Dehaene-Lambertz G., Hénaff M.A., Michel F. The visual word form area: spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain 2000; 123(2): 291–307, https://doi.org/10.1093/brain/123.2.291.

4. Yovel G., Tambini A., Brandman T. The asymmetry of the fusiform face area is a stable individual characteristic that underlies the left-visual-field superiority for faces. Neuropsychologia 2008; 46(13): 3061–3068, https://doi.org/10.1016/j.neuropsychologia.2008.06.017.

5. Dien J. A tale of two recognition systems: implications of the fusiform face area and the visual word form area for lateralized object recognition models. Neuropsychologia 2009; 47(1): 1–16, https://doi.org/10.1016/j.neuropsychologia.2008.08.024.

6. Kanwisher N., McDermott J., Chun M.M. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 1997; 17(11): 4302–4311, https://doi.org/10.1523/jneurosci.17-11-04302.1997.

7. Bouhali F., de Schotten M.T., Pinel P., Poupon C., Mangin J.F., Dehaene S., Cohen L. Anatomical connections of the visual word form area. J Neurosci 2014; 34(46): 15402–15414, https://doi.org/10.1523/jneurosci.4918-13.2014.

8. Harris R.J., Rice G.E., Young A.W., Andrews T.J. Distinct but overlapping patterns of response to words and faces in the fusiform gyrus. Cereb Cortex 2015; 26(7): 3161–3168, https://doi.org/10.1093/cercor/bhv147.

9. Robinson A.K., Plaut D.C., Behrmann M. Word and face processing engage overlapping distributed networks: evidence from RSVP and EEG investigations. J Exp Psychol Gen 2017; 146(7): 943–961, https://doi.org/10.1037/xge0000302.

10. Farah M.J. Cognitive neuropsychology: patterns of co-occurrence among the associative agnosias: implications for visual object representation. Cogn Neuropsychol 1991; 8(1): 1–19, https://doi.org/10.1080/02643299108253364.

11. Farah M.J. Dissociable systems for visual recognition: a cognitive neuropsychology approach. In: Kosslyn S.M., Osherson D. (editors). Visual cognition: an invitation to cognitive science. Vol. 2. Cambridge: MIT Press; 1995; p. 101–119.

12. Buxbaum L.J., Glosser G., Coslett H.B. Impaired face and word recognition without object agnosia. Neuropsychologia 1998; 37(1): 41–50, https://doi.org/10.1016/s0028-3932(98)00048-7.

13. Geskin J., Behrmann M. Congenital prosopagnosia without object agnosia? A literature review. Cogn Neuropsychol 2018; 35(1–2): 4–54, https://doi.org/10.1080/02643294.2017.1392295.

14. Behrmann M., Plaut D.C. Bilateral hemispheric processing of words and faces: evidence from word impairments in prosopagnosia and face impairments in pure alexia. Cereb Cortex 2012; 24(4): 1102–1118, https://doi.org/10.1093/cercor/bhs390.

15. Rossion B., Jacques C. The N170: understanding the time course of face perception in the human brain. In: Luck S.J., Kappenman A.S. (editors). The Oxford handbook of event-related potential components. Oxford University Press; 2011; p. 15–141, https://doi.org/10.1093/oxfordhb/9780195374148.013.0064.

16. Eimer M. Event-related brain potentials distinguish processing stages involved in face perception and recognition. Neurophysiol Clin 2000; 111(4): 694–705, https://doi.org/10.1016/s1388-2457(99)00285-0.

17. Engst F.M., Martín-Loeches M., Sommer W. Memory systems for structural and semantic knowledge of faces and buildings. Brain Res 2006; 1124(1): 70–80, https://doi.org/10.1016/j.brainres.2006.09.038.

18. Caharel S., Poiroux S., Bernard C., Thibaut F., Lalonde R., Rebai M. ERPs associated with familiarity and degree of familiarity during face recognition. Int J Neurosci 2002; 112(12): 1499–1512, https://doi.org/10.1080/00207450290158368.

19. Caharel S., Fiori N., Bernard C., Lalonde R., Rebaï M. The effects of inversion and eye displacements of familiar and unknown faces on early and late-stage ERPs. Int J Psychophysiol 2006; 62(1): 141–151, https://doi.org/10.1016/j.ijpsycho.2006.03.002.

20. Wild-Wall N., Dimigen O., Sommer W. Interaction of facial expressions and familiarity: ERP evidence. Biol Psychol 2008; 77(2): 138–149, https://doi.org/10.1016/j.biopsycho.2007.10.001.

21. Bentin S., Mouchetant-Rostaing Y., Giard M.H., Echallier J.F., Pernier J. ERP manifestations of processing printed words at different psycholinguistic levels: time course and scalp distribution. J Cogn Neurosci 1999; 11(3): 235–260, https://doi.org/10.1162/089892999563373.

22. Maurer U., Brandeis D., McCandliss B.D. Fast, visual specialization for reading in English revealed by the topography of the N170 ERP response. Behav Brain Funct 2005; 1(1): 13, https://doi.org/10.1186/1744-9081-1-13.

23. Cao X., Jiang B., Gaspar C., Li C. The overlap of neural selectivity between faces and words: evidences from the N170 adaptation effect. Exp Brain Res 2014; 232(9): 3015–3021, https://doi.org/10.1007/s00221-014-3986-x.

24. Zhao J., Li S., Lin S.E., Cao X.H., He S., Weng X.C. Selectivity of N170 in the left hemisphere as an electrophysiological marker for expertise in reading Chinese. Neurosci Bull 2012; 28(5): 577–584, https://doi.org/10.1007/s12264-012-1274-y.

25. Rossion B., Joyce C.A., Cottrell G.W., Tarr M.J. Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. Neuroimage 2003; 20(3): 1609–1624, https://doi.org/10.1016/j.neuroimage.2003.07.010.

26. Leleu A., Caharel S., Carré J., Montalan B., Snoussi M., Vom Hofe A., Charvin H., Lalonde R., Rebaï M. Perceptual interactions between visual processing of facial familiarity and emotional expression: an event-related potentials study during task-switching. Neurosci Lett 2010; 482(2): 106–111, https://doi.org/10.1016/j.neulet.2010.07.008.

27. Tanaka J.W., Curran T., Porterfield A.L., Collins D. Activation of preexisting and acquired face representations: the N250 event-related potential as an index of face familiarity. J Cogn Neurosci 2006; 18(9): 1488–1497, https://doi.org/10.1162/jocn.2006.18.9.1488.

28. Begleiter H., Porjesz B., Wang W. Event-related brain potentials differentiate priming and recognition to familiar and unfamiliar faces. Electroencephalogr Clin Neurophysiol 1995; 94(1): 41–49, https://doi.org/10.1016/0013-4694(94)00240-L.

29. Miyakoshi M., Nomura M., Ohira H. An ERP study on self-relevant object recognition. Brain Cogn 2007; 63(2): 182–189, https://doi.org/10.1016/j.bandc.2006.12.001.

30. Itier R.J., Taylor M.J. Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. Neuroimage 2002; 15(2): 353–372, https://doi.org/10.1006/nimg.2001.0982.

31. Bentin S., Deouell L.Y. Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cogn Neuropsychol 2000; 7(1–3): 35–55, https://doi.org/10.1080/026432900380472.

32. Yick Y.Y., Wilding E.L. Material-specific neural correlates of memory retrieval. Neuroreport 2008; 19(15): 1463–1467, https://doi.org/10.1097/wnr.0b013e32830ef76f.

33. MacKenzie G., Donaldson D.I. Examining the neural basis of episodic memory: ERP evidence that faces are recollected differently from names. Neuropsychologia 2009; 47(13): 2756–2765, https://doi.org/10.1016/j.neuropsychologia.2009.05.025.

34. Joyce C.A., Kutas M. Event-related potential correlates of long-term memory for briefly presented faces. J Cogn Neurosci 2005; 17(5): 757–767, https://doi.org/10.1162/0898929053747603.

35. Rugg M.D., Allan K. Event-related potential studies of memory. In: Tulving E., Craik F.I.M. (editors). The Oxford handbook of memory. Oxford University Press; 2000; p. 521–537.

36. Meyer P., Mecklinger A., Friederici A.D. Bridging the gap between the semantic N400 and the early old/new memory effect. Neuroreport 2007; 18(10): 1009–1013, https://doi.org/10.1097/wnr.0b013e32815277eb.

37. Kutas M., Federmeier K.D. Thirty years and counting: finding meaning in the N400 component of the event-related brain potential (ERP). Annu Rev Psychol 2011; 62(1): 621–647, https://doi.org/10.1146/annurev.psych.093008.131123.

38. Nie A., Griffin M., Keinath A., Walsh M., Dittmann A., Reder L. ERP profiles for face and word recognition are based on their status in semantic memory not their stimulus category. Brain Res 2014; 1557: 66–73, https://doi.org/10.1016/j.brainres.2014.02.010.

39. Lyashevskaya O.N., Sharov S.A. Chastotnyy slovar’ sovremennogo russkogo yazyka (na materialakh Natsional’nogo korpusa russkogo yazyka) [A frequency dictionary of contemporary Russian (based on the materials of the Russian National Corpus)]. Moscow: Azbukovnik; 2009.

40. Wilding E.L. In what way does the parietal ERP old/new effect index recollection? Int J Psychophysiol 2000; 35(1): 81–87, https://doi.org/10.1016/s0167-8760(99)00095-1.

41. Guillem F., N’Kaoua B., Rougier A., Claverie B. Intracranial topography of event-related potentials (N400/P600) elicited during a continuous recognition memory task. Psychophysiology 1995; 32(4): 382–392, https://doi.org/10.1111/j.1469-8986.1995.tb01221.x.

42. Courtney S.M., Ungerleider L.G., Keil K., Haxby J.V. Transient and sustained activity in a distributed neural system for human working memory. Nature 1997; 386(6625): 608–611, https://doi.org/10.1038/386608a0.

43. Haxby J.V., Ungerleider L.G., Horwitz B., Maisog J.M., Rapoport S.I., Grady C.L. Face encoding and recognition in the human brain. Proc Natl Acad Sci U S A 1996; 93(2): 922–927, https://doi.org/10.1073/pnas.93.2.922.

44. Banich M.T., Milham M.P., Atchley R.A., Cohen N.J., Webb A., Wszalek T., Kramer A.F., Liang Z., Barad V., Gullett D., Shah C., Brown C. Prefrontal regions play a predominant role in imposing an attentional ‘set’: evidence from fMRI. Brain Res Cogn Brain Res 2000; 10(1–2): 1–9, https://doi.org/10.1016/s0926-6410(00)00015-x.