Artificial Intelligence Technologies in the Microsurgical Operating Room (Review)

Surgery performed by a novice neurosurgeon under the constant supervision of a senior surgeon who has the experience of thousands of operations, can anticipate and handle any intraoperative complication, and never tires is currently an elusive dream, but it may become a reality as artificial intelligence methods develop.

This paper presents a review of the literature on the use of artificial intelligence technologies in the microsurgical operating room. Sources were searched in the PubMed database of medical and biological publications. The key words used were “surgical procedures”, “dexterity”, “microsurgery” AND “artificial intelligence” OR “machine learning” OR “neural networks”. Articles in English and Russian were considered with no limitation on publication date. The main directions of research on the use of artificial intelligence technologies in the microsurgical operating room are highlighted.

Although machine learning has been increasingly introduced into the medical field in recent years, only a small number of studies on the problem of interest have been published, and their results have not yet proved to be of practical use. However, the social significance of this direction is an important argument for its development.

Introduction

In recent decades, there has been significant interest in the practical application of artificial intelligence (AI), including machine learning, in the field of clinical medicine. The current advances in AI technologies in neuroimaging open up new perspectives in the development of non-invasive and personalized diagnostics. Thus, methods of radiomics, i.e., extracting a large number of features from medical images, are actively developing. These features may contain information to describe tumors and brain structures which are not visible to the naked eye [1–5]. It is assumed that the correct presentation and analysis of images with neuroimaging features will help to distinguish between types of tumors and correlate them with the clinical manifestations of the disease, prognosis, and the most effective treatment.

Technologies that evaluate the relationship between features of tumor imaging and gene expression are called radiogenomics [6–9]. These methods are aimed at creating imaging biomarkers that can identify the genetic signs of disease without biopsy.

Advances in AI have also been reported in the analysis of molecular and genetic data, signals from invasive sensors, and medical texts. The universality of AI approaches opens up new, original ways of using them in the clinic.

From a technical point of view, the term “artificial intelligence” can denote a mathematical technology that automates the solution to some intellectual problem traditionally solved by a person. In a broader sense, this term refers to the field of computer science in which such solutions are developed.

Modern AI relies on machine learning technologies — methods for extracting patterns and rules from the data representative of a specific task (medical images, text records, genetic sequences, laboratory tests, etc.). For example, AI can find “rules” for predicting poor treatment outcomes from a set of predictors by “studying” retrospectively a sufficient number of similar cases with known outcomes. This AI property can be used in solving tasks of automating individual diagnostic processes, selecting treatment tactics, or predicting outcomes of medical care according to clinical findings.
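The idea of “learning rules” from retrospective cases with known outcomes can be illustrated with a minimal sketch. It assumes logistic regression as the learning method (one of many possible choices, not one named above), and the two predictors and all case values are hypothetical, synthetic numbers chosen only for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, clamped so that math.exp cannot overflow."""
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(cases, outcomes, lr=0.1, epochs=2000):
    """Fit weights and a bias by batch gradient descent on the log-loss."""
    n_features = len(cases[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(cases, outcomes):
            # Difference between predicted risk and the known outcome.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        weights = [w - lr * g / len(cases) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(cases)
    return weights, bias


def predict_risk(weights, bias, x):
    """Predicted probability of a poor outcome for one new case."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical retrospective cases: two predictors scaled to [0, 1].
CASES = [(0.2, 0.1), (0.3, 0.2), (0.4, 0.3), (0.3, 0.4),
         (0.7, 0.8), (0.8, 0.6), (0.9, 0.9), (0.6, 0.9)]
# Known outcomes: 1 = poor outcome, 0 = favorable outcome.
OUTCOMES = [0, 0, 0, 0, 1, 1, 1, 1]

WEIGHTS, BIAS = fit_logistic(CASES, OUTCOMES)
```

Calling `predict_risk(WEIGHTS, BIAS, (0.9, 0.9))` then returns the estimated risk for a new case. In a real clinical setting the model would also need validation on cases it was not trained on.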

In medical practice, particularly in surgery, AI, along with surgical robots, 3D printing, and new imaging methods, helps to solve a wide range of problems and to increase the accuracy and efficiency of operations.

The use of AI is even more important in microsurgery, where interventions are performed on small anatomical sites using optical devices and microsurgical tools.

One AI challenge in microsurgery is the automatic recognition of anatomical structures that are critical for the microsurgeon (arteries, veins, nerves, etc.) in intraoperative photographs, video images, or images of anatomical preparations. Solving this problem opens prospects for AI tools that issue real-time alerts when critical structures are at risk of injury during surgery and that suggest trajectories for safe dissection or incisions in functionally significant areas [10].

Artificial intelligence can evaluate the handling of surgical instruments, check the positioning of the microinstrument (its position in the surgeon’s hand and relative to the surgical wound), and assess hand tremor during surgery.

Determining a phase of surgery, predicting outcomes and complications, and creating the basis for an intelligent intraoperative decision support system are prospective goals for AI in microsurgery.

A non-trivial task for AI in microsurgery is assessing the skills of novice surgeons and residents and helping more experienced specialists to improve theirs. Given the extreme complexity and responsibility of a microsurgeon’s work, solving this problem will bring this field of medicine to new frontiers.

To assess the available solutions for using AI in the microsurgical operating room, articles in the PubMed database of medical and biological publications were analyzed. The literature search was carried out using the key words “surgical procedures”, “dexterity”, “microsurgery” AND “artificial intelligence” OR “machine learning” OR “neural networks” among articles in English and Russian with no limitation on publication date.

Automatic assessment of the level of microsurgical skills

Continuous training and constant improvement of microsurgical techniques are essential for becoming a skilled microsurgeon. Acquiring the required level of microsurgical skills often takes most of a professional life [11–13].

Microsurgical training requires the constant participation of a tutor who corrects non-optimal actions and movements of the trainee and supervises the learning process. A parallel can be drawn between the training of microsurgeons and Olympic athletes: achieving a high level is impossible without a proper training system and highly qualified coaches. However, because of the heavy clinical workload and strenuous schedule of skilled microsurgeon tutors, their permanent presence in the microsurgical laboratory is impossible, and starting training in a real operating room conflicts with the norms of medical ethics. In this situation, AI technologies can be used in the learning process to monitor the correctness and effectiveness of the manual actions of a novice neurosurgeon.

To date, the set of AI technologies adapted for the analysis of microsurgical manipulations is very limited. For example, the use of accelerometers attached to microsurgical instruments to assess the level of microsurgical tremor was described in the papers by Bykanov et al. [14] and Coulson et al. [15]. In the work by Harada et al. [16], infrared optical motion tracking markers, an inertial measurement unit, and load cells were mounted on microsurgical tweezers to measure the spatial parameters associated with instrument manipulation. AI and machine learning methods were not applied in this work. Applebaum et al. [17] compared parameters such as the time and number of movements during a microsurgical task performed by plastic surgeons with different levels of experience, using an electromagnetic motion tracking device to record the movement of the surgeon’s hands. This approach to the assessment of microsurgical performance stands out for the objectivity and reliability of instrumental measurements, but it requires special equipment.
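As a rough illustration of how an accelerometer trace might be reduced to tremor metrics, the sketch below computes the root-mean-square amplitude and the dominant frequency of a one-axis signal. The cited papers do not specify their processing in this review, so the choice of metrics, the function name `tremor_metrics`, and the synthetic 8 Hz test signal are all assumptions made for illustration.

```python
import cmath
import math


def tremor_metrics(samples, sampling_rate_hz):
    """Return (rms_amplitude, dominant_frequency_hz) for a 1-D acceleration trace."""
    n = len(samples)
    mean = sum(samples) / n
    # Remove the constant offset (for example, gravity) before analysis.
    centered = [s - mean for s in samples]
    rms = math.sqrt(sum(c * c for c in centered) / n)

    # Naive discrete Fourier transform over the positive frequency bins.
    best_bin, best_power = 0, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(c * cmath.exp(-2j * math.pi * k * i / n)
                    for i, c in enumerate(centered))
        power = abs(coeff) ** 2
        if power > best_power:
            best_bin, best_power = k, power
    return rms, best_bin * sampling_rate_hz / n


# Synthetic example (assumption): 2 s of an 8 Hz oscillation with amplitude 0.5,
# sampled at 100 Hz and riding on a constant 9.81 offset.
SAMPLING_RATE_HZ = 100
SIGNAL = [9.81 + 0.5 * math.sin(2 * math.pi * 8 * i / SAMPLING_RATE_HZ)
          for i in range(200)]
RMS, DOMINANT_HZ = tremor_metrics(SIGNAL, SAMPLING_RATE_HZ)
```

The naive transform is quadratic in the number of samples, which is acceptable for short traces. A production pipeline would more likely use a fast Fourier transform and band-pass filtering.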

Expert analysis of video recordings of the surgeon’s work in the operating room is an alternative method for assessing the degree of mastery of microsurgical techniques. However, involving an expert assessor in the analysis of such recordings is time-consuming and extremely laborious. Frame-by-frame analysis of microinstrument motion based on video recordings of a simulated surgical performance was applied by Óvári et al. [18]. Satterwhite et al. [19] attempted to objectively evaluate and categorize microsurgical performance based on video recordings of microsurgical training. However, in that work the performance of the trained microsurgeons was evaluated by expert assessors who viewed the video recordings and graded them according to the developed scale, which does not eliminate the influence of subjective factors on the results.

A promising alternative to these technologies is machine learning, primarily computer vision, for the automated evaluation of macro- and microsurgical performance. These methods can be based on the detection and analysis of microsurgical instrument motion in the surgical wound. After analyzing the limited scientific literature on this topic, we summarized the main processes for obtaining data for the analysis of microsurgical procedures using machine learning (Table 1).

Table 1. Features of data mining process used in machine learning for the analysis of microsurgical manipulations

The few data available in the scientific literature indicate that machine learning methods make it possible to identify complex relationships in the movement patterns of a microsurgeon and to predict parameters of the effectiveness of microsurgical performance. To implement these tasks, the first step is to train the model to correctly classify the motion and the microsurgical instrument itself in the surgery video. Ongoing research in this direction is mostly focused on teaching computers two main functions: determining the phase of a surgical operation and identifying a surgical instrument [20].

In works on microsurgery using machine learning, two types of data sources are most often used: video recordings of surgery [21] and sets of variables obtained from sensors attached to microinstruments or to the body of the operating surgeon. Some studies combine both sources [22].

In the study by Markarian et al. [21], a deep learning model based on RetinaNet was developed to identify, localize, and annotate surgical instruments in intraoperative video recordings of endoscopic endonasal operations. According to the findings of the study, the developed model successfully identified surgical instruments. However, all the instruments in the work were assigned to a single class, “instruments”, so instrument types were not distinguished.

Pangal et al. [23] evaluated the ability of a deep neural network (DNN) to predict blood loss and damage to the internal carotid artery from 1 minute of video obtained from a validated simulator for endonasal neurosurgery. The predictions of the model and of the expert assessors coincided in the vast majority of cases.

In the work by McGoldrick et al. [24], the researchers used video recordings made directly from the camera of the operating microscope and the ProAnalyst software to analyze the smoothness of movements of a vascular microsurgeon performing a microanastomosis, using a logistic regression model and a cubic spline.

Franco-González et al. [25] designed a stereoscopic system with two cameras that recorded images of surgical tweezers from different angles. The 3D motion tracking software was created using the C++ programming language and the OpenCV 3.4.11 library.
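The principle behind recovering a 3D instrument position from two camera views can be sketched as follows. This is not the cited system: it assumes an idealized, rectified stereo pair (parallel optical axes, identical focal length, pixel coordinates measured from the principal point), whereas cameras placed at different angles would require full calibration and general triangulation. All function names and numeric values are hypothetical.

```python
import math


def triangulate_rectified(x_left, x_right, y, focal_px, baseline_mm):
    """Return the (X, Y, Z) position in mm of a point seen by a rectified stereo pair.

    x_left, x_right and y are pixel coordinates relative to the principal point;
    the result is expressed in the left camera frame.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    depth = focal_px * baseline_mm / disparity
    return (x_left * depth / focal_px, y * depth / focal_px, depth)


def path_length(points):
    """Total distance travelled along a sequence of 3D points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


# Hypothetical tip detections (x_left, x_right, y) in three consecutive frames.
DETECTIONS = [(100, 60, 20), (104, 64, 20), (108, 68, 22)]
TRAJECTORY = [triangulate_rectified(xl, xr, y, focal_px=800, baseline_mm=50)
              for xl, xr, y in DETECTIONS]
TRAVELLED_MM = path_length(TRAJECTORY)
```

Path length is only one of many motion metrics that could be derived from such a trajectory. Velocity, idle time, or number of movements could be computed from the same list of points.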

Oliveira et al. [26] showed that the use of machine learning and computer vision in the simulation of microsurgical operations helps to improve the basic skills of both residents and experts with extensive experience.

The use of long short-term memory (LSTM) neural networks in the analysis algorithms was a major advance in surgical phase recognition, raising the accuracy of phase determination to 85–90%.
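To make the role of the memory cell concrete, the sketch below implements one LSTM time step for a single hidden unit in plain Python. It is a teaching illustration under simplifying assumptions: real phase-recognition systems use deep learning frameworks, many hidden units, and learned weights applied to features extracted from video frames. The parameter layout and function names are hypothetical.

```python
import math


def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """Run one LSTM time step for a single hidden unit.

    params maps each gate name ("i", "f", "o", "g") to a tuple (w_x, w_h, b),
    where w_x is a list of input weights, w_h the recurrent weight, b the bias.
    """
    def pre_activation(gate):
        w_x, w_h, b = params[gate]
        return sum(w * xi for w, xi in zip(w_x, x)) + w_h * h_prev + b

    i = _sigmoid(pre_activation("i"))   # input gate: how much new content to write
    f = _sigmoid(pre_activation("f"))   # forget gate: how much old memory to keep
    o = _sigmoid(pre_activation("o"))   # output gate: how much memory to expose
    g = math.tanh(pre_activation("g"))  # candidate content computed from this frame
    c = f * c_prev + i * g              # updated cell (long-term) state
    h = o * math.tanh(c)                # updated hidden (short-term) state
    return h, c


def run_sequence(frames, params):
    """Feed per-frame feature vectors through the cell and collect hidden states."""
    h, c = 0.0, 0.0
    states = []
    for x in frames:
        h, c = lstm_step(x, h, c, params)
        states.append(h)
    return states
```

Because the cell state `c` is carried from frame to frame, the output for the current frame can depend on what happened much earlier in the video, which is the property that makes such networks suitable for recognizing surgical phases.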

It is important to note that, because data volumes are typically limited, model developers often use so-called transfer learning [27], in which a model is first pre-trained on one dataset (most often on open datasets that address similar problems in the same subject area) and then fine-tuned on another dataset, on which the target problem is solved. The following open datasets are currently used in solving problems related to assessing the accuracy of surgical operations:

EndoVis Challenge datasets are a collection of labeled datasets that contain videos of various types of surgical operations for classification, segmentation, detection, localization, etc. [28];

Cholec80 contains 80 videos of endoscopic operations performed by 13 different surgeons; all videos are labeled taking into account the phases of operations and the presence of instruments in the frame [29];

MICCAI challenge datasets are released for a large number of competitions in medical data analysis, including the analysis of surgical materials [30];

JIGSAWS (JHU-ISI Gesture and Skill Assessment Working Set) is a labeled dataset of video recordings of eight surgeons with three skill levels who performed a total of 103 basic robotic laboratory tests [31];

ATLAS Dione contains 99 videos of 6 types of surgeries performed by 10 different surgeons using the da Vinci Surgical System. The frame size is 854×480 pixels, and each frame is labeled for the presence of surgical instruments [32].

Theoretically, hundreds and thousands of videos can be analyzed using machine learning methods. However, to train a model, the videos must first be viewed and labeled manually, which is very time-consuming. A possible solution to this problem is the use of new algorithms that annotate video files automatically [33].

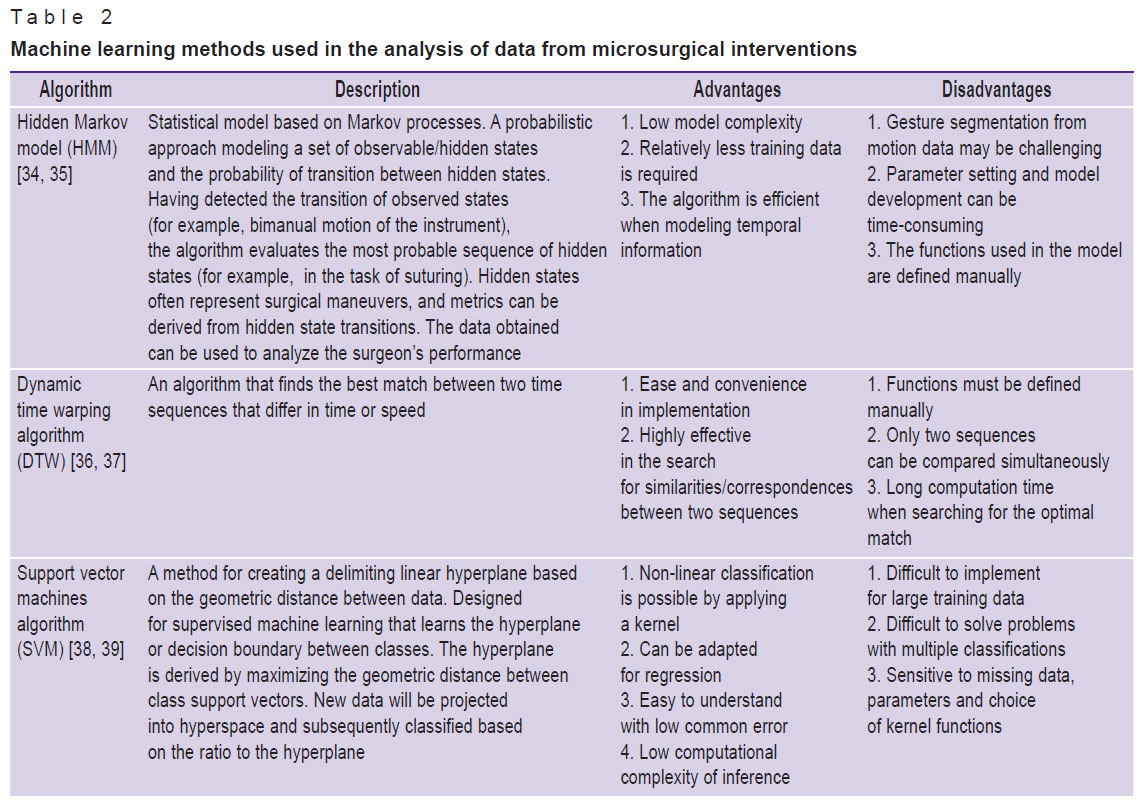

Table 2 shows a list of machine learning methods used, according to scientific literature, in the analysis of video images of microsurgical interventions, with a brief description of them.

Table 2. Machine learning methods used in the analysis of data from microsurgical interventions

Most studies using AI for the analysis of microneurosurgical performance were conducted on models of the simplest surgical procedures or on separate elementary phases of operations (for example, suturing or making incisions). Pilot studies in this area naturally start with simplified models. However, real surgery involves a complex set of factors that affect the surgical technique and the results of manipulations and that are difficult to take into account in an experiment. Consequently, transferring machine learning models from experimental conditions to real practice cannot guarantee high-quality performance, which reduces their value.

Conclusion

Despite the rapid development of machine learning methods in clinical medicine, their use for evaluating microsurgical techniques is still at the initial stage of testing, and they are unlikely to be introduced into everyday clinical practice in the near future. However, there is every reason to believe that machine learning technologies, computer vision in particular, have good potential to improve the process of learning microsurgical techniques. This is a good prerequisite for the development of a special area of artificial intelligence in the field of microneurosurgery.

Study funding. The study was supported by a grant from the Russian Science Foundation, project No. 22-75-10117.

Conflicts of interest. The authors declare no conflicts of interest.

References

- Jian A., Jang K., Manuguerra M., Liu S., Magnussen J., Di Ieva A. Machine learning for the prediction of molecular markers in glioma on magnetic resonance imaging: a systematic review and meta-analysis. Neurosurgery 2021; 89(1): 31–44, https://doi.org/10.1093/neuros/nyab103.

- Litvin A.A., Burkin D.A., Kropinov A.A., Paramzin F.N. Radiomics and digital image texture analysis in oncology (review). Sovremennye tehnologii v medicine 2021; 13(2): 97, https://doi.org/10.17691/stm2021.13.2.11.

- Ning Z., Luo J., Xiao Q., Cai L., Chen Y., Yu X., Wang J., Zhang Y. Multi-modal magnetic resonance imaging-based grading analysis for gliomas by integrating radiomics and deep features. Ann Transl Med 2021; 9(4): 298, https://doi.org/10.21037/atm-20-4076.

- Lambin P., Rios-Velazquez E., Leijenaar R., Carvalho S., van Stiphout R.G., Granton P., Zegers C.M., Gillies R., Boellard R., Dekker A., Aerts H.J. Radiomics: extracting more information from medical images using advanced feature analysis. Eur J Cancer 2012; 48(4): 441–446, https://doi.org/10.1016/j.ejca.2011.11.036.

- Habib A., Jovanovich N., Hoppe M., Ak M., Mamindla P., Colen R.R., Zinn P.O. MRI-based radiomics and radiogenomics in the management of low-grade gliomas: evaluating the evidence for a paradigm shift. J Clin Med 2021; 10(7): 1411, https://doi.org/10.3390/jcm10071411.

- Cho H.H., Lee S.H., Kim J., Park H. Classification of the glioma grading using radiomics analysis. PeerJ 2018; 6: e5982, https://doi.org/10.7717/peerj.5982/supp-3.

- Su C., Jiang J., Zhang S., Shi J., Xu K., Shen N., Zhang J., Li L., Zhao L., Zhang J., Qin Y., Liu Y., Zhu W. Radiomics based on multicontrast MRI can precisely differentiate among glioma subtypes and predict tumour-proliferative behaviour. Eur Radiol 2019; 29(4): 1986–1996, https://doi.org/10.1007/s00330-018-5704-8.

- Cao X., Tan D., Liu Z., Liao M., Kan Y., Yao R., Zhang L., Nie L., Liao R., Chen S., Xie M. Differentiating solitary brain metastases from glioblastoma by radiomics features derived from MRI and 18F-FDG-PET and the combined application of multiple models. Sci Rep 2022; 12(1): 5722, https://doi.org/10.1038/s41598-022-09803-8.

- Qian J., Herman M.G., Brinkmann D.H., Laack N.N., Kemp B.J., Hunt C.H., Lowe V., Pafundi D.H. Prediction of MGMT status for glioblastoma patients using radiomics feature extraction from 18F-DOPA-PET imaging. Int J Radiat Oncol Biol Phys 2020; 108(5): 1339–1346, https://doi.org/10.1016/j.ijrobp.2020.06.073.

- Witten A.J., Patel N., Cohen-Gadol A. Image segmentation of operative neuroanatomy into tissue categories using a machine learning construct and its role in neurosurgical training. Oper Neurosurg (Hagerstown) 2022; 23(4): 279–286, https://doi.org/10.1227/ons.0000000000000322.

- Likhterman L.B. Healing: standards and art. Nejrohirurgia 2020; 22(2): 105–108, https://doi.org/10.17650/1683-3295-2020-22-2-105-108.

- Gusev E.I., Burd G.S., Konovalov A.N. Nevrologiya i neyrokhirurgiya [Neurology and neurosurgery]. Meditsina; 2000; URL: http://snsk.az/snsk/file/2013-05-9_11-31-06.pdf.

- Krylov V.V., Konovalov A.N., Dash’yan V.G., Kondakov E.N., Tanyashin S.V., Gorelyshev S.K., Dreval’ O.N., Grin’ A.A., Parfenov V.E., Kushniruk P.I., Gulyaev D.A., Kolotvinov V.S., Rzaev D.A., Poshataev K.E., Kravets L.Ya., Mozheiko R.A., Kas’yanov V.A., Kordonskii A.Yu., Trifonov I.S., Kalandari A.A., Shatokhin T.A., Airapetyan A.A., Dalibaldyan V.A., Grigor’ev I.V., Sytnik A.V. Neurosurgery in Russian Federation. Voprosy neirokhirurgii im. N.N. Burdenko 2017; 81(1): 5–12, https://doi.org/10.17116/neiro20178075-12.

- Bykanov A., Kiryushin M., Zagidullin T., Titov O., Rastvorova O. Effect of energy drinks on microsurgical hand tremor. Plast Reconstr Surg Glob Open 2021; 9(4): e3544, https://doi.org/10.1097/gox.0000000000003544.

- Coulson C.J., Slack P.S., Ma X. The effect of supporting a surgeon’s wrist on their hand tremor. Microsurgery 2010; 30(7): 565–568, https://doi.org/10.1002/micr.20776.

- Harada K., Morita A., Minakawa Y., Baek Y.M., Sora S., Sugita N., Kimura T., Tanikawa R., Ishikawa T., Mitsuishi M. Assessing microneurosurgical skill with medico-engineering technology. World Neurosurg 2015; 84(4): 964–971, https://doi.org/10.1016/j.wneu.2015.05.033.

- Applebaum M.A., Doren E.L., Ghanem A.M., Myers S.R., Harrington M., Smith D.J. Microsurgery competency during plastic surgery residency: an objective skills assessment of an integrated residency training program. Eplasty 2018; 18: e25.

- Óvári A., Neményi D., Just T., Schuldt T., Buhr A., Mlynski R., Csókay A., Pau H.W., Valálik I. Positioning accuracy in otosurgery measured with optical tracking. PLoS One 2016; 11(3): e0152623, https://doi.org/10.1371/journal.pone.0152623.

- Satterwhite T., Son J., Carey J., Echo A., Spurling T., Paro J., Gurtner G., Chang J., Lee G.K. The Stanford Microsurgery and Resident Training (SMaRT) scale: validation of an on-line global rating scale for technical assessment. Ann Plast Surg 2014; 72(Suppl 1): S84–S88, https://doi.org/10.1097/sap.0000000000000139.

- Ward T.M., Mascagni P., Ban Y., Rosman G., Padoy N., Meireles O., Hashimoto D.A. Computer vision in surgery. Surgery 2021; 169(5): 1253–1256, https://doi.org/10.1016/j.surg.2020.10.039.

- Markarian N., Kugener G., Pangal D.J., Unadkat V., Sinha A., Zhu Y., Roshannai A., Chan J., Hung A.J., Wrobel B.B., Anandkumar A., Zada G., Donoho D.A. Validation of machine learning-based automated surgical instrument annotation using publicly available intraoperative video. Oper Neurosurg (Hagerstown) 2022; 23(3): 235–240, https://doi.org/10.1227/ons.0000000000000274.

- Jin A., Yeung S., Jopling J., Krause J., Azagury D., Milstein A., Fei-Fei L. Tool detection and operative skill assessment in surgical videos using region-based convolutional neural networks. In: Proc 2018 IEEE Winter Conf Appl Comput Vision WACV 2018; p. 691–699, https://doi.org/10.1109/wacv.2018.00081.

- Pangal D.J., Kugener G., Zhu Y., Sinha A., Unadkat V., Cote D.J., Strickland B., Rutkowski M., Hung A., Anandkumar A., Han X.Y., Papyan V., Wrobel B., Zada G., Donoho D.A. Expert surgeons and deep learning models can predict the outcome of surgical hemorrhage from 1 min of video. Sci Rep 2022; 12(1): 8137, https://doi.org/10.1038/s41598-022-11549-2.

- McGoldrick R.B., Davis C.R., Paro J., Hui K., Nguyen D., Lee G.K. Motion analysis for microsurgical training: objective measures of dexterity, economy of movement, and ability. Plast Reconstr Surg 2015; 136(2): 231e–240e, https://doi.org/10.1097/prs.0000000000001469.

- Franco-González I.T., Pérez-Escamirosa F., Minor-Martínez A., Rosas-Barrientos J.V., Hernández-Paredes T.J. Development of a 3D motion tracking system for the analysis of skills in microsurgery. J Med Syst 2021; 45(12): 106, https://doi.org/10.1007/s10916-021-01787-8.

- Oliveira M.M., Quittes L., Costa P.H.V., Ramos T.M., Rodrigues A.C.F., Nicolato A., Malheiros J.A., Machado C. Computer vision coaching microsurgical laboratory training: PRIME (Proficiency Index in Microsurgical Education) proof of concept. Neurosurg Rev 2022; 45(2): 1601–1606, https://doi.org/10.1007/s10143-021-01663-6.

- Wang J., Zhu H., Wang S.H., Zhang Y.D. A review of deep learning on medical image analysis. Mob Networks Appl 2020; 26: 351–380, https://doi.org/10.1007/s11036-020-01672-7.

- Du X., Kurmann T., Chang P.L., Allan M., Ourselin S., Sznitman R., Kelly J.D., Stoyanov D. Articulated multi-instrument 2-D pose estimation using fully convolutional networks. IEEE Trans Med Imaging 2018; 37(5): 1276–1287, https://doi.org/10.1109/tmi.2017.2787672.

- Twinanda A.P., Shehata S., Mutter D., Marescaux J., de Mathelin M., Padoy N. EndoNet: a deep architecture for recognition tasks on laparoscopic videos. IEEE Trans Med Imaging 2017; 36(1): 86–97, https://doi.org/10.1109/tmi.2016.2593957.

- Commowick O., Kain M., Casey R., Ameli R., Ferré J.C., Kerbrat A., Tourdias T., Cervenansky F., Camarasu-Pop S., Glatard T., Vukusic S., Edan G., Barillot C., Dojat M., Cotton F. Multiple sclerosis lesions segmentation from multiple experts: the MICCAI 2016 challenge dataset. Neuroimage 2021; 244: 118589, https://doi.org/10.1016/j.neuroimage.2021.118589.

- Gao Y., Vedula S.S., Reiley C.E., Ahmidi N., Varadarajan B., Lin H.C., Tao L., Zappella L., Béjar B., Yuh D.D., Chen C.C.G., Vidal R., Khudanpur S., Hager G.D. JHU-ISI Gesture And Skill Assessment Working Set (JIGSAWS): a surgical activity dataset for human motion modeling. 2022. URL: https://cirl.lcsr.jhu.edu/wp-content/uploads/2015/11/JIGSAWS.pdf.

- Sarikaya D., Corso J.J., Guru K.A. Detection and localization of robotic tools in robot-assisted surgery videos using deep neural networks for region proposal and detection. IEEE Trans Med Imaging 2017; 36(7): 1542–1549, https://doi.org/10.1109/tmi.2017.2665671.

- Yu T., Mutter D., Marescaux J., Padoy N. Learning from a tiny dataset of manual annotations: a teacher/student approach for surgical phase recognition. arXiv; 2018, https://doi.org/10.48550/arxiv.1812.00033.

- Ahmidi N., Poddar P., Jones J.D., Vedula S.S., Ishii L., Hager G.D., Ishii M. Automated objective surgical skill assessment in the operating room from unstructured tool motion in septoplasty. Int J Comput Assist Radiol Surg 2015; 10(6): 981–991, https://doi.org/10.1007/s11548-015-1194-1.

- Rosen J., Hannaford B., Richards C.G., Sinanan M.N. Markov modeling of minimally invasive surgery based on tool/tissue interaction and force/torque signatures for evaluating surgical skills. IEEE Trans Biomed Eng 2001; 48(5): 579–591, https://doi.org/10.1109/10.918597.

- Jiang J., Xing Y., Wang S., Liang K. Evaluation of robotic surgery skills using dynamic time warping. Comput Methods Programs Biomed 2017; 152: 71–83, https://doi.org/10.1016/j.cmpb.2017.09.007.

- Peng W., Xing Y., Liu R., Li J., Zhang Z. An automatic skill evaluation framework for robotic surgery training. Int J Med Robot 2019; 15(1): e1964, https://doi.org/10.1002/rcs.1964.

- Poursartip B., LeBel M.E., McCracken L.C., Escoto A., Patel R.V., Naish M.D., Trejos A.L. Energy-based metrics for arthroscopic skills assessment. Sensors (Basel) 2017; 17(8): 1808, https://doi.org/10.3390/s17081808.

- Gorantla K.R., Esfahani E.T. Surgical skill assessment using motor control features and hidden Markov model. Annu Int Conf IEEE Eng Med Biol Soc 2019; 2019: 5842–5845, https://doi.org/10.1109/embc.2019.8857629.

- Bissonnette V., Mirchi N., Ledwos N., Alsidieri G., Winkler-Schwartz A., Del Maestro R.F.; Neurosurgical Simulation & Artificial Intelligence Learning Centre. Artificial intelligence distinguishes surgical training levels in a virtual reality spinal task. J Bone Joint Surg Am 2019; 101(23): e127, https://doi.org/10.2106/jbjs.18.01197.

- Winkler-Schwartz A., Yilmaz R., Mirchi N., Bissonnette V., Ledwos N., Siyar S., Azarnoush H., Karlik B., Del Maestro R. Machine learning identification of surgical and operative factors associated with surgical expertise in virtual reality simulation. JAMA Netw Open 2019; 2(8): e198363, https://doi.org/10.1001/jamanetworkopen.2019.8363.

- Hung A.J., Chen J., Che Z., Nilanon T., Jarc A., Titus M., Oh P.J., Gill I.S., Liu Y. Utilizing machine learning and automated performance metrics to evaluate robot-assisted radical prostatectomy performance and predict outcomes. J Endourol 2018; 32(5): 438–444, https://doi.org/10.1089/end.2018.0035.

- Baghdadi A., Hussein A.A., Ahmed Y., Cavuoto L.A., Guru K.A. A computer vision technique for automated assessment of surgical performance using surgeons console-feed videos. Int J Comput Assist Radiol Surg 2019; 14(4): 697–707, https://doi.org/10.1007/s11548-018-1881-9.

- Yamaguchi T., Suzuki K., Nakamura R. Development of a visualization and quantitative assessment system of laparoscopic surgery skill based on trajectory analysis from USB camera image. Int J Comput Assist Radiol Surg 2016; 11(suppl): S254–S256.

- Weede O., Möhrle F., Wörn H., Falkinger M., Feussner H. Movement analysis for surgical skill assessment and measurement of ergonomic conditions. In: 2014 2nd International Conference on Artificial Intelligence, Modelling and Simulation. IEEE; 2014; p. 97–102, https://doi.org/10.1109/aims.2014.69.

- Kelly J.D., Petersen A., Lendvay T.S., Kowalewski T.M. Bidirectional long short-term memory for surgical skill classification of temporally segmented tasks. Int J Comput Assist Radiol Surg 2020; 15(12): 2079–2088, https://doi.org/10.1007/s11548-020-02269-x.

- Gahan J., Steinberg R., Garbens A., Qu X., Larson E. MP34-06 machine learning using a multi-task convolutional neural networks can accurately assess robotic skills. J Urol 2020; 203(Suppl 4): e505, https://doi.org/10.1097/ju.0000000000000878.06.

- Liu Y., Zhao Z., Shi P., Li F. Towards surgical tools detection and operative skill assessment based on deep learning. IEEE Trans Med Robot Bionics 2022; 4(1): 62–71, https://doi.org/10.1109/tmrb.2022.3145672.